CES UX Cockpit Concepts 2012-2014

At Visteon, I worked within the UX innovation team to bring new ideas to life during a pivotal shift in automotive interiors—from being defined by form to being shaped by user experience. Many of the prototypes I created were both design and UX explorations, serving as research-driven studies that helped answer key questions about how emerging technologies could enable new cockpit experiences. These concepts were shown at clinics and events to spark dialogue and inspire what the future of in-car experience could be.

Project Role

Industrial Design Lead, Alias Build, Fab

Key Design Tools Used

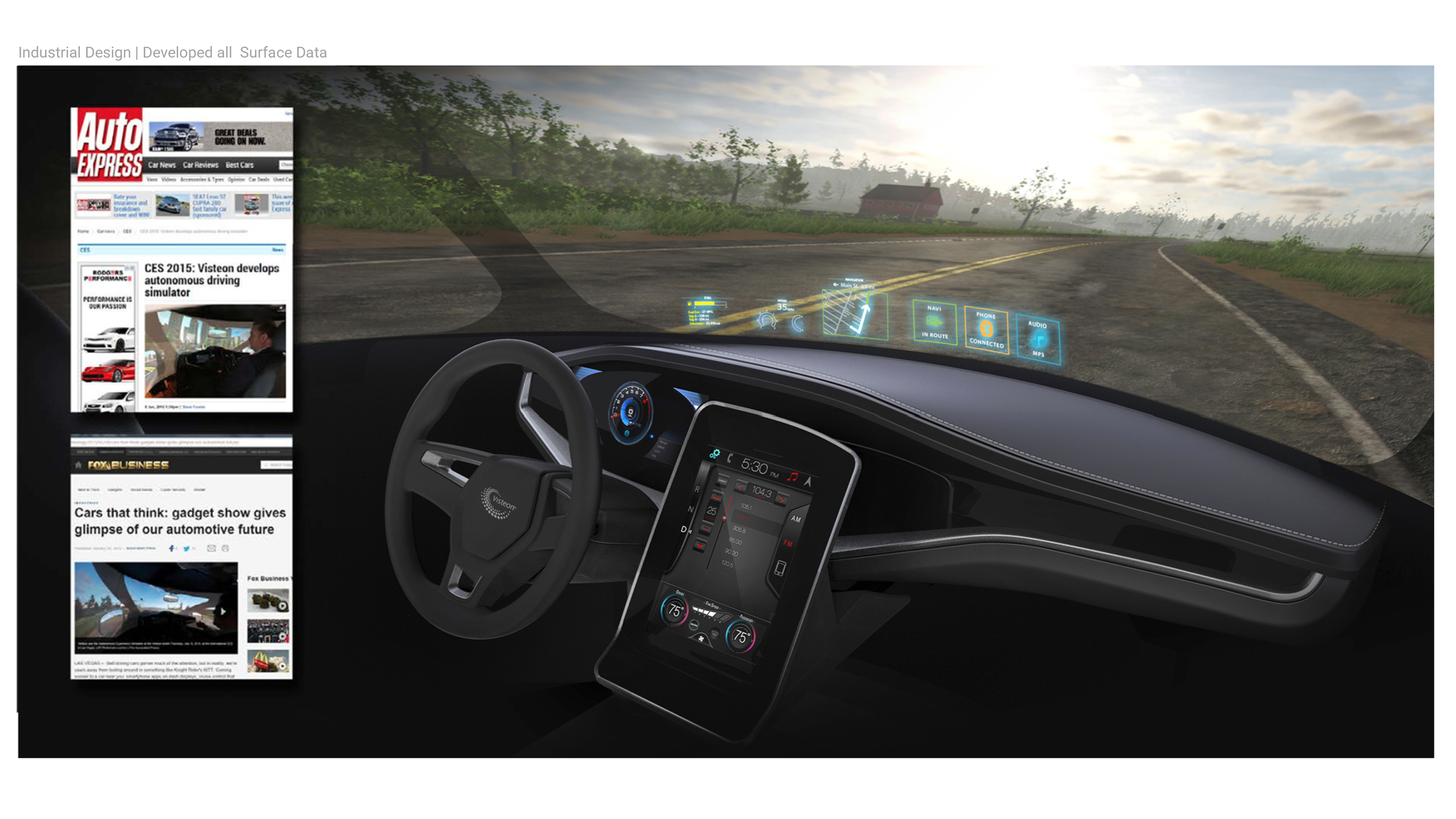

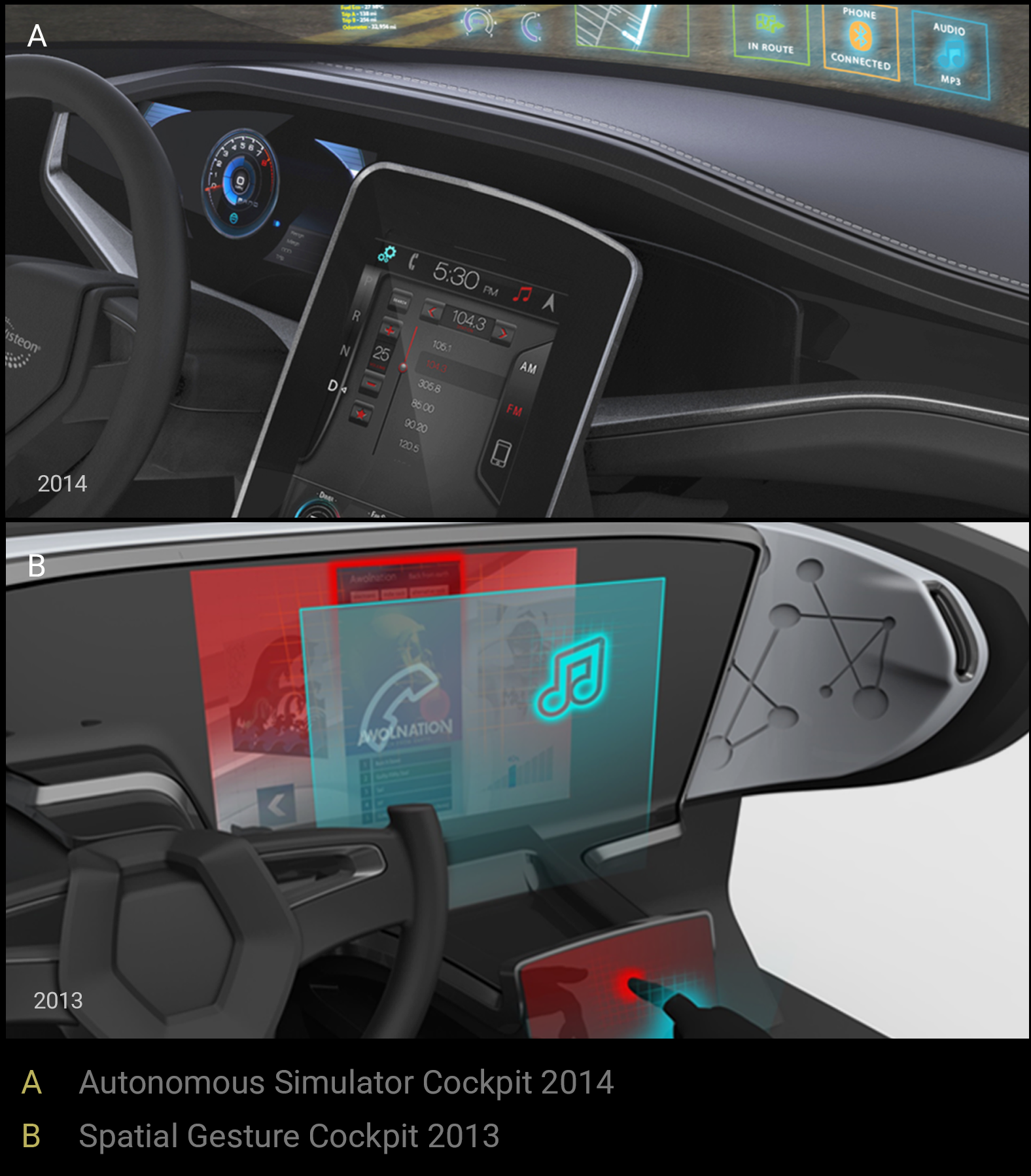

2014 Autonomous simulator

The autonomous driving simulator built on insights from the EnGuage cluster project, using consumer feedback and clinic research as its foundation. It served as an experiential tool to explore how drivers envision autonomous driving—examining the in-vehicle user experience, how time is spent when not driving, and most importantly, the transition from manual control to full autonomy.

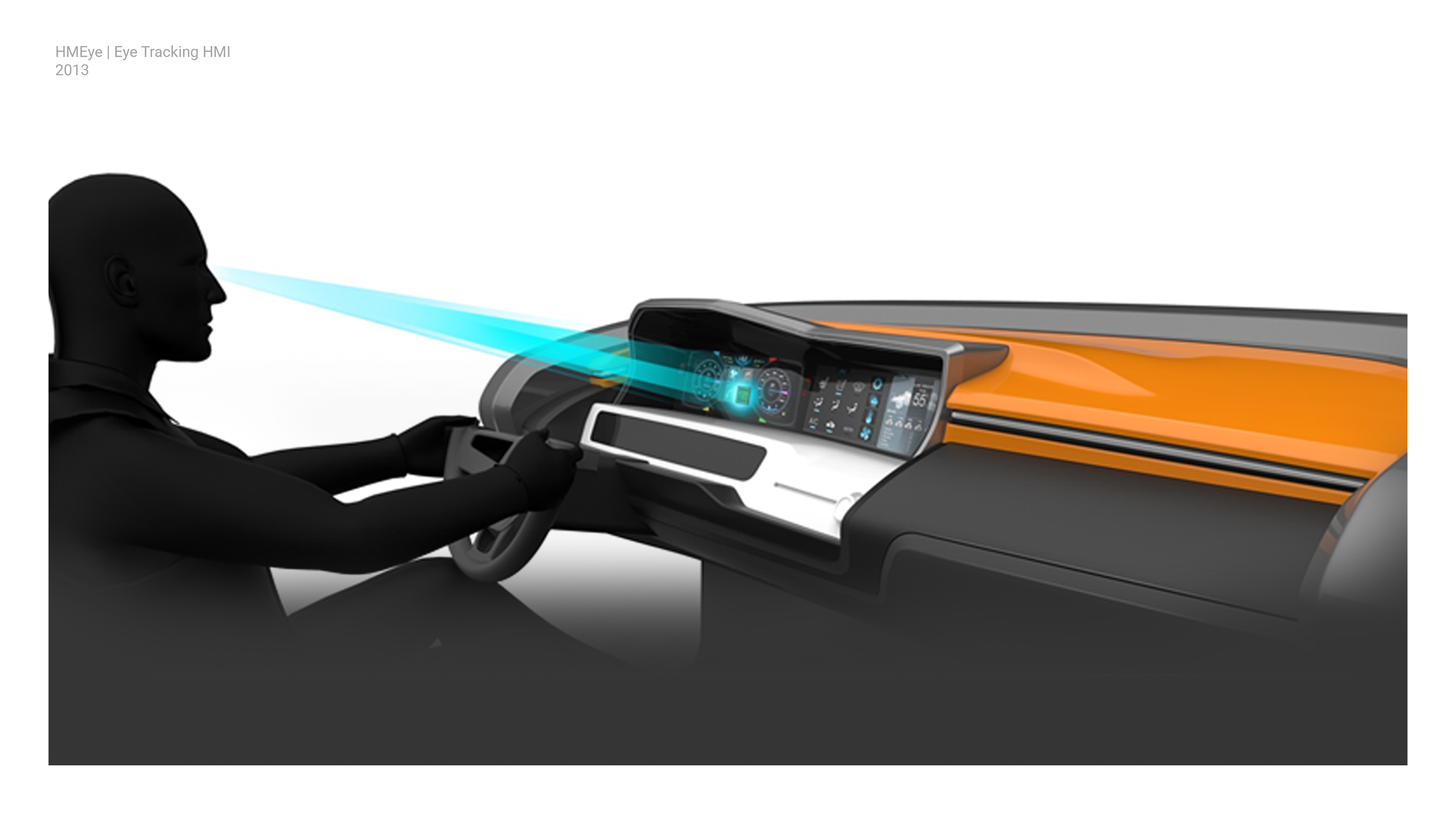

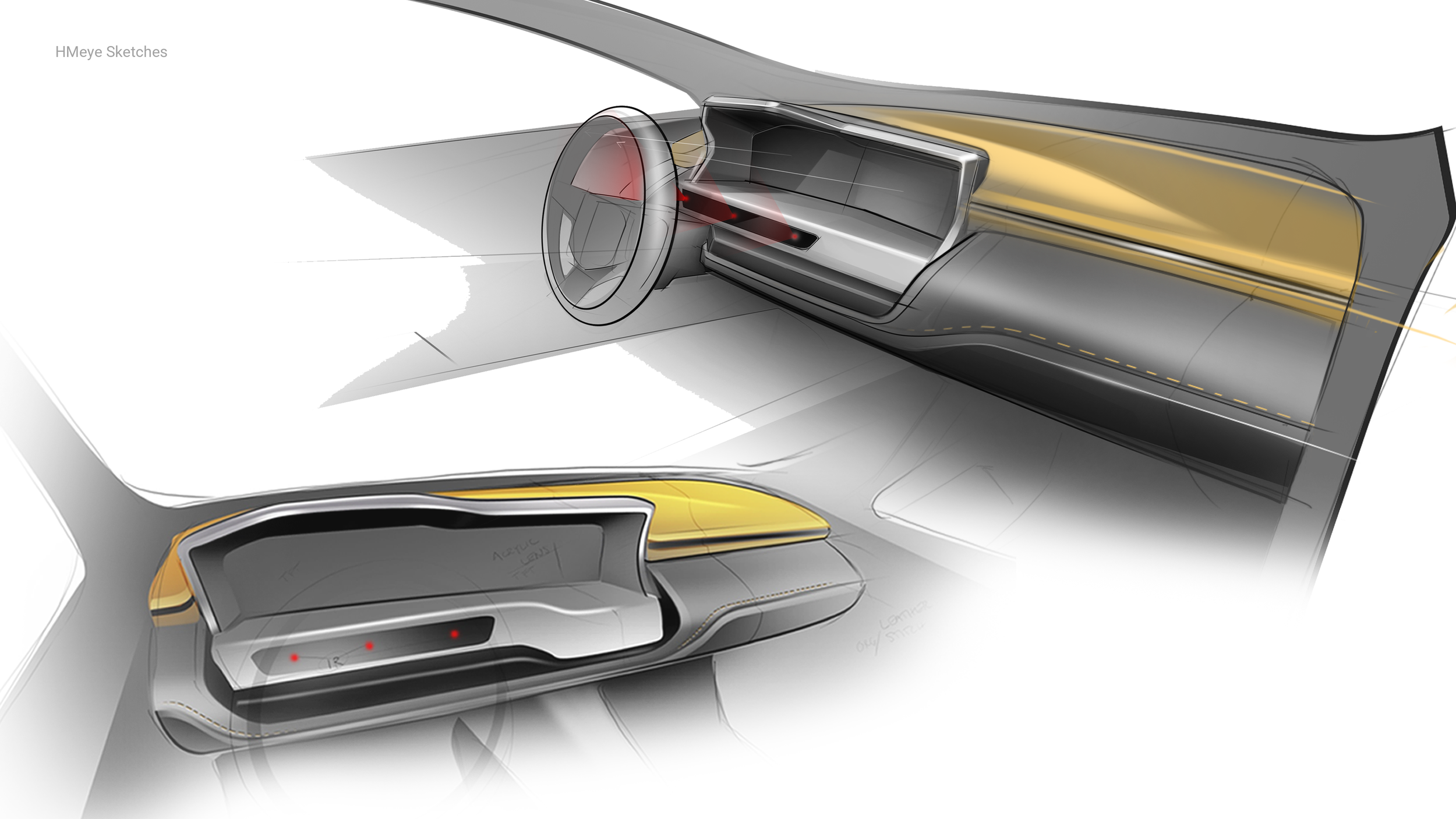

2013 HMeye

HMeye explored the value that eye-tracking technology could bring to the cockpit as an input modality. Most of the challenge lay in creating a cockpit feel and packaging large IR sensors that needed a clear line of sight to the driver.

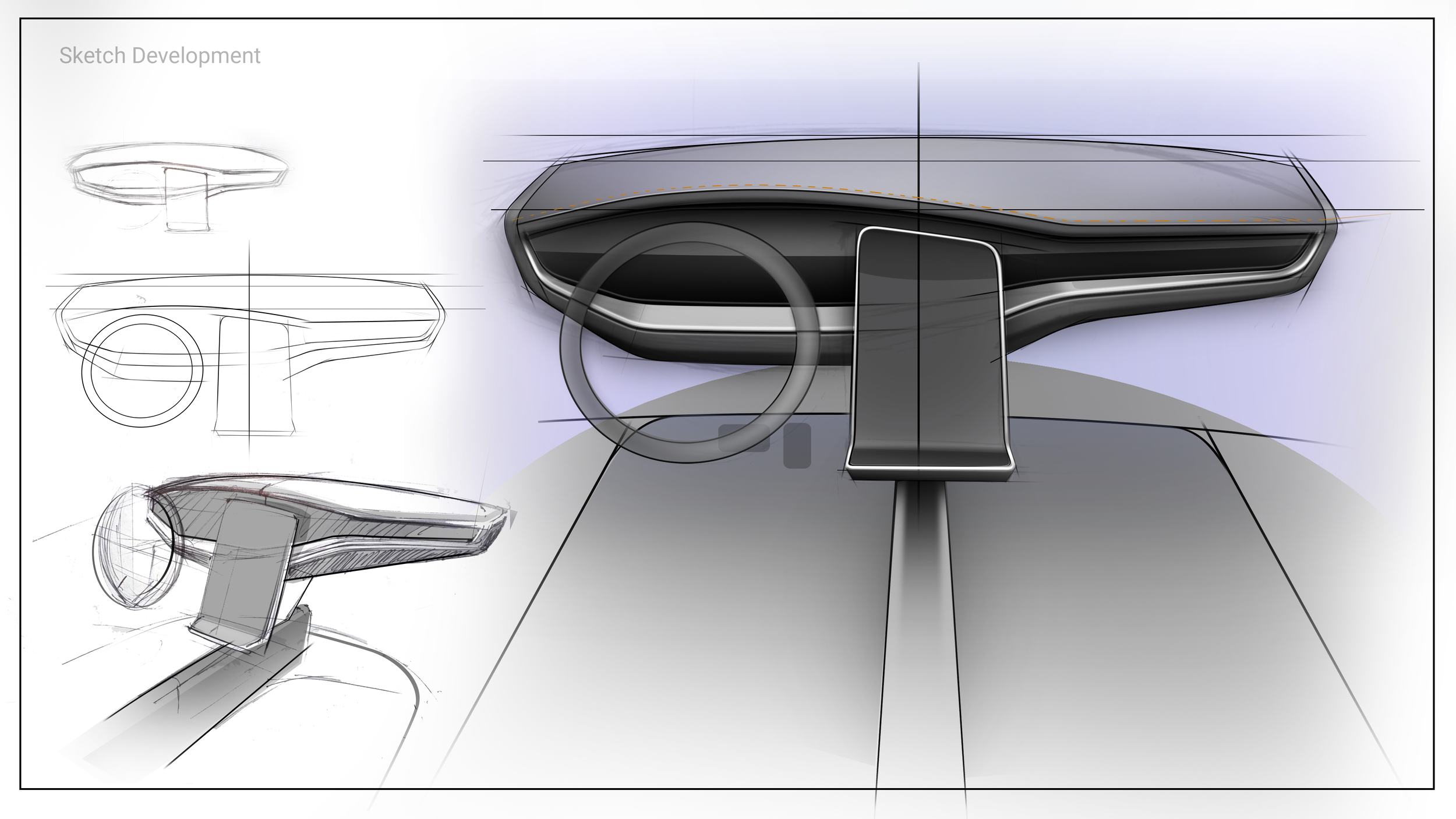

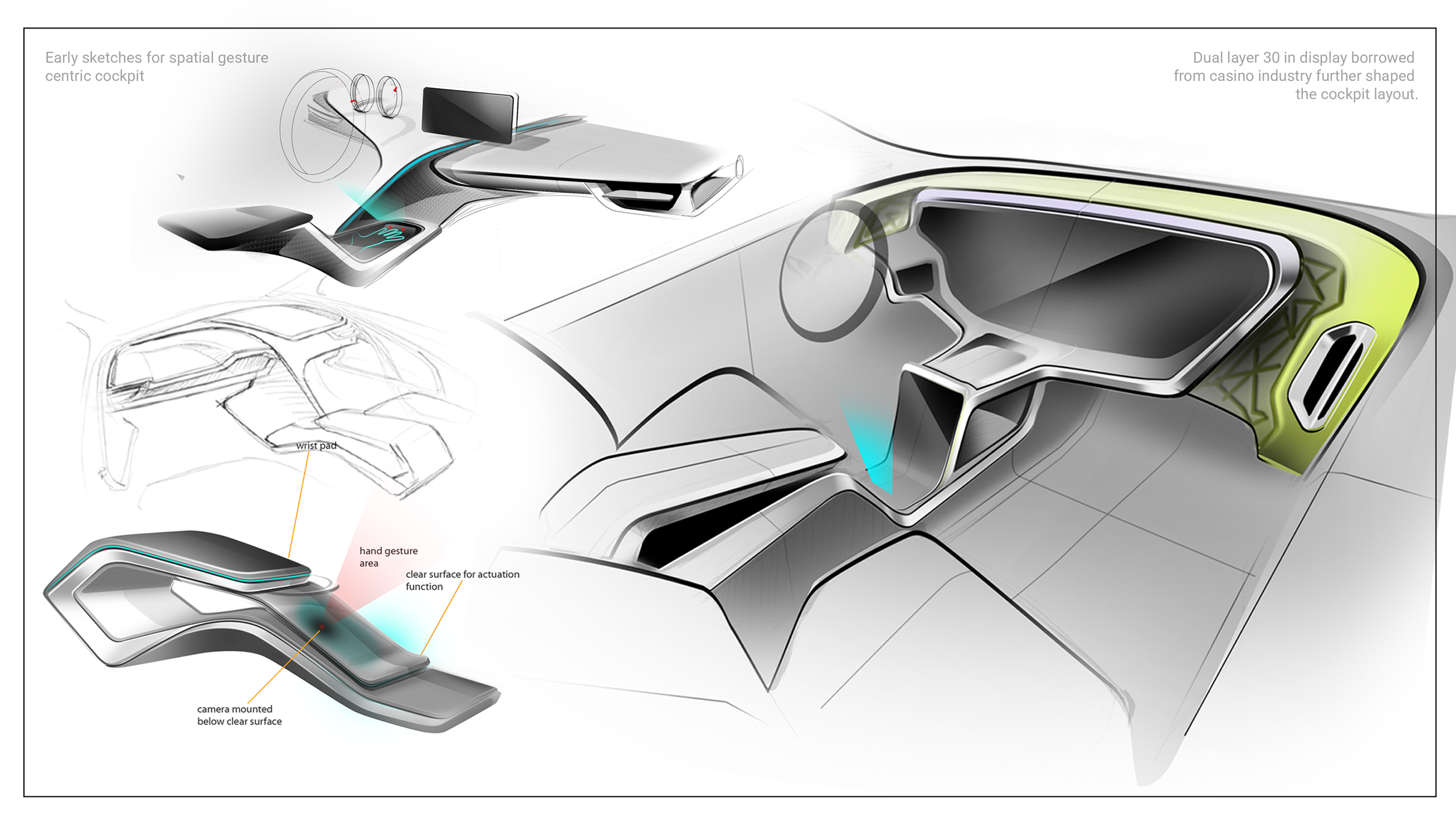

Horizon began as an exploration of spatial gesture as an input modality and later expanded into developing a UX for a multi-layer display controlled by that gesture input. I was tasked with continuously integrating various off-the-shelf technologies into a cockpit-like experience.